As a species, we’ve always sought to do less while achieving more, a principle that has driven centuries of innovation. Today, artificial intelligence (AI) embodies this spirit, offering unprecedented tools to streamline work, enhance decision-making, and unlock new opportunities. As the modern workforce embarks on its AI journey, organizations must embrace this technology thoughtfully, setting clear boundaries to guide its use. Left unchecked, the risks to data quality, integrity, and security can lead to brand damage (a loss of trust that is hard to win back), slower growth (in revenue and market share), and legal liabilities. Creating a company AI policy is now an immediate need for any business that wants to take advantage of the AI revolution and stay ahead of the curve.

A good company AI policy educates, informs, inspires, and gives staff direction on using AI tools. It’s a welcome guide for the modern workforce, helping organizations strike the right balance while aiming to achieve sustainable growth.

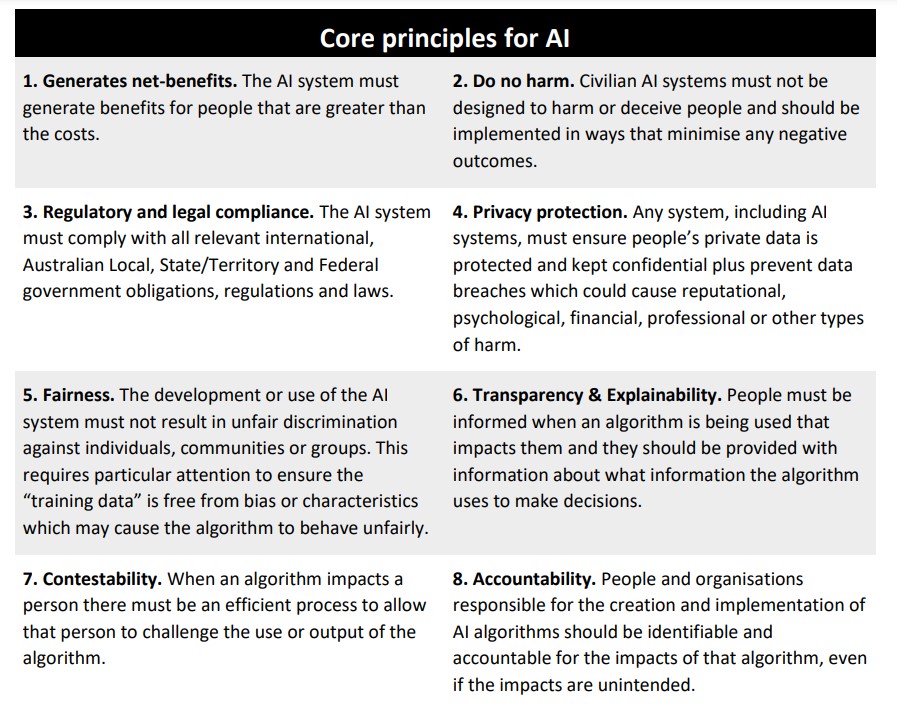

Drawing from Australia’s Ethics Framework for Artificial Intelligence, this article outlines the risks of unmanaged AI, the importance of governance, and actionable steps to help leaders develop an effective company AI policy.

The Role of AI in the Modern Workplace

Across industries, AI tools are used to make businesses faster, smarter, and more efficient. From small startups to global corporations, these technologies are helping companies:

- Save time by automating repetitive tasks.

- Reduce costs through data-driven decision-making.

- Innovate by enabling faster experimentation and ideation.

But with great power comes great responsibility. AI tools, while powerful, don’t operate in a vacuum. Their effectiveness—and their risks—are directly tied to how they’re used and governed.

As we’ll explore in later sections, implementing a company AI policy ensures these tools are used responsibly, ethically, and effectively. With the right boundaries and guidance, AI can be a game-changer for your organization without becoming a liability.

First, let’s recap the most common types of AI.

Common Types of AI

AI tools are no longer the stuff of science fiction; many of us already interact with them daily, often without realizing it. From chatbots that assist customers online to algorithms that predict what you might want to buy next, AI is quietly becoming the backbone of modern business operations. But what exactly are AI tools, and how are they being used in companies today?

At its core, AI refers to systems or tools that mimic human intelligence to perform tasks. These systems can analyze data, make decisions, and generate content based on patterns and inputs.

Let’s break it down further into a few key categories of AI tools and how they’re applied:

Generative AI

Generative AI refers to tools that create new content based on patterns they’ve learned from existing data. These tools can generate text, images, audio, and even code.

How It Works: Generative AI uses algorithms trained on large datasets to produce outputs that mimic human creativity. For example, a tool like ChatGPT is trained on a very large body of text, from which it learns patterns of sentence structure, context, and tone. Given an input prompt, it repeatedly predicts the next word to build a coherent response. These tools rely heavily on their training data, which shapes their capabilities and limitations.
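To make the idea of "predicting the next word" concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any production model works internally; it only counts which word most often follows another in a made-up sample text. The corpus and the function names `predict_next` and `generate` are assumptions invented for this illustration.

```python
from collections import Counter, defaultdict

# Tiny, made-up training text (an assumption for illustration only).
corpus = (
    "our policy guides staff . "
    "our policy protects data . "
    "our policy guides teams ."
).split()

# "Training": count how often each word follows each other word.
next_word_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_word_counts[current][following] += 1


def predict_next(word):
    """Return the word most often seen after `word`, or None if unseen."""
    counts = next_word_counts.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(start, max_words=4):
    """Extend `start` by repeatedly appending the predicted next word."""
    words = [start]
    for _ in range(max_words - 1):
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(generate("our", max_words=3))
```

Even this toy shows why training data matters: the sketch can only ever produce word pairs it has already seen, and a word that never appeared in the sample text gets no prediction at all.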

Examples in Action:

- Writing product descriptions or marketing materials.

- Generating designs for advertisements or campaigns.

- Creating drafts for legal documents or internal communications.

Why It Matters: While these tools save time and boost creativity, they’re not perfect. Outputs can sometimes include errors or biases, making human oversight essential.

Large Language Models (LLMs): Where They Fit In

LLMs, such as OpenAI’s GPT (Generative Pre-trained Transformer), are a specific type of AI designed to process and generate human-like text. They underpin many generative AI tools and are built on massive datasets to enable advanced natural language understanding and generation.

- How LLMs Work: LLMs are trained on billions of text examples, allowing them to predict and generate sequences of words that feel natural and contextually relevant. Using deep learning techniques, they model relationships between words and concepts in their training data to create responses that align with user prompts. Their ability to handle nuance, context, and even humor is what makes them particularly powerful. However, they are limited by their training data and lack true understanding, so their outputs can sometimes be misleading or incorrect.

Predictive Analytics and Machine Learning

Predictive analytics and machine learning focus on identifying patterns in data to forecast future trends and outcomes. These tools excel in decision-making and problem-solving.

How It Works: Machine learning models are trained on historical data using algorithms that adjust and improve their performance over time. For instance, a predictive analytics tool might analyze years of sales data to predict future trends, continuously refining its predictions as it receives new information. The “learning” happens as the model identifies which patterns lead to accurate outcomes, enabling it to improve with more data exposure.
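As a rough illustration of "learning from historical data", the sketch below fits a straight-line trend to past sales and projects the next period, then refits once a new figure arrives. Real predictive tools use far richer models; the sales figures and the function names `fit_trend` and `forecast_next` are assumptions made up for this example.

```python
def fit_trend(sales):
    """Fit a least-squares straight line to sales indexed 0, 1, 2, ..."""
    n = len(sales)
    if n < 2:
        raise ValueError("need at least two data points to fit a trend")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(sales) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, sales))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast_next(sales):
    """Project the fitted trend one period past the end of the data."""
    slope, intercept = fit_trend(sales)
    return slope * len(sales) + intercept


# Hypothetical quarterly sales figures.
history = [100.0, 110.0, 120.0, 130.0]
first_forecast = forecast_next(history)

# A new actual figure arrives, higher than forecast; refitting on the
# longer history shifts the trend and the next prediction.
history.append(150.0)
updated_forecast = forecast_next(history)

print(first_forecast, updated_forecast)
```

The second forecast changes only because the input data changed, which is also why poor or incomplete data feeds directly into poor predictions.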

Examples in Action:

- Forecasting sales or inventory needs based on historical data.

- Identifying potential risks in financial transactions or supply chains.

- Personalizing customer experiences by predicting preferences.

Why It Matters: Predictive tools can help companies stay ahead of trends, but they rely on the quality of the data they’re fed. Poor data leads to poor predictions, which can have serious consequences.

Automation Tools

AI-powered automation tools are designed to perform repetitive, rule-based tasks quickly and accurately, reducing the need for human intervention.

How It Works: Automation tools integrate AI with workflow systems to execute predefined processes, often in real time. For example, in customer service, a chatbot might instantly pull customer data, recognize the nature of the query, and generate a response, all without human involvement. These tools leverage AI to adapt workflows dynamically, identifying efficiencies that traditional rule-based automation might miss.

Examples in Action:

- Automating invoice processing in finance departments.

- Screening resumes for HR teams.

- Managing email workflows or customer service inquiries.

Why It Matters: Automation tools free up employees to focus on strategic work. However, improper implementation or over-reliance can lead to mistakes or missed opportunities for personal interaction.

The Risks of Not Having a Company AI Policy

AI tools are rapidly becoming part of everyday workflows, but here’s the reality: even without a company policy, your staff will use them anyway. And when they do so without clear direction or boundaries, the risks multiply. Employees may feed sensitive data into AI tools, unaware of where that data goes or how it might be used. This rogue usage leaves organizations exposed to risks that can damage their reputation, finances, and compliance standing.

Here are the most significant risks of unmanaged AI use:

Data Privacy and Security Risks

AI tools often process large amounts of data to deliver results, but when employees input sensitive information without guidelines, the organization loses control over that data.

- The Challenge: Many AI platforms store user inputs on external servers, which could include sensitive customer details, financial data, or proprietary strategies. Without a policy, employees may unwittingly expose this information.

- The Consequences: A data breach or misuse of this data could lead to regulatory fines, legal actions, and a loss of trust with customers and partners.

Intellectual Property Risks

AI tools don’t just analyze data—they often learn from it. When employees use these tools without rules, proprietary company information could become part of an AI system’s broader training dataset.

- The Challenge: AI tools may retain and reuse inputs, which could result in proprietary ideas, designs, or strategies being leaked or used by competitors.

- The Consequences: Losing control of intellectual property undermines a company’s competitive advantage and puts trade secrets at risk.

Compliance and Legal Risks

Even in their early stages, AI regulations are clear about one thing: companies are accountable for how they use AI tools. Without a policy, employees may unknowingly break the rules.

- The Challenge: Regulations like GDPR and other data protection laws require strict controls over how personal data is handled. Employees using AI tools for convenience might inadvertently violate these standards.

- The Consequences: Non-compliance can result in hefty fines, legal challenges, and reputational damage—especially in highly regulated sectors like finance or healthcare.

Reputational Damage

AI outputs aren’t perfect, and when employees use AI tools unchecked, they could produce biased, offensive, or inaccurate content that reflects poorly on the company.

- The Challenge: Without guidance, employees may rely too heavily on AI-generated outputs, which could misrepresent facts or perpetuate unintended biases.

- The Consequences: Public backlash, customer dissatisfaction, and even employee morale issues can arise when AI tools are misused.

Employee Misuse or Over-Reliance

When there’s no policy to guide them, employees are left to experiment. While experimentation can be valuable, it can also lead to misuse or an over-reliance on AI.

- The Challenge: Employees may use AI tools to bypass quality control or even for tasks that are inappropriate, like processing confidential data or creating external communications without oversight.

- The Consequences: Over-reliance on AI can lead to mistakes, decreased critical thinking, and even ethical missteps that harm the company’s reputation.

AI is here to stay, and employees will find ways to use it—whether or not your company has a policy. A well-crafted company AI policy sets clear rules for how AI tools should (and shouldn’t) be used. It protects your organization from these risks while empowering employees to use AI responsibly and effectively.

Why AI Governance Matters

AI governance isn’t just about avoiding mistakes; it’s about creating the right environment for AI to thrive in your organization. Without a structured approach, AI can easily become a liability instead of the transformative tool it promises to be.

Turning Risks into Opportunities

AI governance helps you get ahead of potential challenges like data misuse, compliance issues, or reputational risks. But it also does more than just mitigate risks—it positions your company to lead in a rapidly evolving AI-driven world.

- How It Helps: When employees know the boundaries and expectations, they can confidently use AI tools to innovate without fear of stepping over legal or ethical lines.

- Example: Companies with strong governance policies are better placed to avoid high-profile mishaps, such as data breaches or biased AI outputs, and to earn trust from customers and stakeholders.

Governance Builds Trust

AI governance signals to stakeholders—whether customers, regulators, or employees—that your organization takes responsible AI use seriously.

- For Customers: It shows that their data is being handled securely and ethically.

- For Regulators: It demonstrates that your company is ahead of the curve in compliance and ethical practices.

- For Employees: It creates a culture of accountability, empowering them to use AI confidently while staying aligned with company policies.

Your Company AI Policy as the Core of Governance

A company AI policy isn’t just a set of rules; it’s the foundation of your governance strategy. It answers critical questions like:

- What tasks can AI tools be used for?

- Who is responsible for overseeing AI usage and outputs?

- How will the organization ensure compliance and manage risks?

With a clear AI policy, your company has a blueprint for consistency, ensuring that employees, tools, and processes align with your goals.

Governance That Evolves With AI

Your governance framework must keep pace with a rapidly evolving space.

A robust governance framework:

- Includes regular reviews to adapt to new technologies and regulations.

- Establishes feedback loops to incorporate lessons learned from AI usage.

- Encourages ongoing employee training to address new opportunities and risks.

Governance isn’t a one-and-done effort; it’s a commitment to evolving alongside AI. Nor is it about creating barriers: its purpose is to unlock the full potential of AI while protecting your organization from avoidable risks. With strong governance in place, using Australia’s AI Ethics Principles as the backbone, AI can become a powerful enabler of innovation, efficiency, and trust.

How to Create a Company AI Policy

Establishing a company AI policy is essential to guide ethical and effective use of AI tools. It not only sets clear boundaries for employees but also builds trust with customers and stakeholders. Here’s how to develop a robust policy, step by step, while embedding change management principles to ensure successful adoption.

Assemble a Cross-Functional Team

Start by forming a diverse team that includes representatives from key departments such as IT, legal, compliance, HR, and operations.

- Actionable Tip: In smaller organizations, this may mean adding AI governance responsibilities to existing roles.

- Change Management Focus: Involve employees from different levels and functions to encourage buy-in and ensure the policy reflects the needs of the broader workforce.

Conduct an AI Usage Audit

Evaluate how AI tools are currently being used across the organization. Identify tools that employees have adopted informally, as well as formal systems already in place.

- Actionable Tip: Map out where data is being processed, stored, and shared to uncover potential risks.

- Change Management Focus: Engage employees during this audit to understand their current pain points and how AI tools are being used to address them.

Define Acceptable Use Cases and Boundaries

Set clear guidelines on how AI tools should—and shouldn’t—be used in the workplace.

- Actionable Tip: Specify tasks where AI is approved (e.g., automating repetitive processes) and prohibited (e.g., handling sensitive data or critical decision-making without oversight).

- Change Management Focus: Clearly communicate the “why” behind these boundaries to build awareness and help employees understand how the policy benefits them and the organization.
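For teams that want their acceptable-use rules to be checkable by software as well as readable by people, the approved and prohibited lists can be captured as simple data. The sketch below is one possible shape, not a recommended standard; the class `AIUsePolicy`, the task names, and the "needs_review" fallback are all assumptions invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsePolicy:
    """A hypothetical, minimal encoding of acceptable-use rules."""

    approved_tasks: frozenset
    prohibited_tasks: frozenset

    def check(self, task):
        """Classify a task; prohibited rules win over approved ones."""
        if task in self.prohibited_tasks:
            return "prohibited"
        if task in self.approved_tasks:
            return "approved"
        # Anything not listed goes to a human for a decision.
        return "needs_review"


# Example task names are placeholders, not a recommended list.
policy = AIUsePolicy(
    approved_tasks=frozenset({"draft_internal_email", "summarize_public_report"}),
    prohibited_tasks=frozenset({"process_customer_records", "final_hiring_decision"}),
)

for task in ("draft_internal_email", "process_customer_records", "translate_contract"):
    print(task, "->", policy.check(task))
```

Sending unlisted tasks to review, rather than silently allowing or blocking them, keeps a person in the loop for anything the policy has not yet considered.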

Develop Data Governance and Security Protocols

AI relies heavily on data, so your policy must include strong governance measures.

- Actionable Tips:

  - Establish rules for inputting data into AI systems, ensuring compliance with privacy laws like GDPR.

  - Implement monitoring tools to track AI usage and restrict unauthorized tools on company networks.

- Change Management Focus: Create a feedback loop so employees can voice concerns about how data is handled, ensuring transparency.
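The two tips above can be sketched in a few lines of Python: masking obvious personal data before a prompt leaves the company, and checking a tool's address against an approved list. This is a minimal illustration under stated assumptions, not a compliance solution. The regular expressions catch only simple email addresses and long digit runs, and the host names, `redact`, and `is_approved_tool` are hypothetical.

```python
import re
from urllib.parse import urlparse

# Simplified patterns (assumptions): real personal data takes many more forms.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LONG_NUMBER_PATTERN = re.compile(r"\b\d(?:[ -]?\d){7,}\b")  # 8 or more digits

# Hypothetical allowlist of AI tool hosts approved by the governance team.
APPROVED_HOSTS = {"approved-ai.example.com"}


def redact(text):
    """Mask email addresses and long numbers before text is sent to an AI tool."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return LONG_NUMBER_PATTERN.sub("[NUMBER]", text)


def is_approved_tool(url):
    """Return True only if the URL's host is on the approved list."""
    host = urlparse(url).hostname
    return host is not None and host in APPROVED_HOSTS


prompt = "Contact jane.doe@example.com about card 4111 1111 1111 1111."
print(redact(prompt))
print(is_approved_tool("https://approved-ai.example.com/chat"))
print(is_approved_tool("https://unknown-tool.example.org/"))
```

Pattern matching like this will miss names, addresses, and free-text details, so it complements staff training and clear rules rather than replacing them.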

Write the Policy and Provide Training

Draft the policy in clear, accessible language, ensuring it’s easy for all employees to understand.

- Actionable Tips:

  - Include specific sections on data handling, ethical considerations, acceptable tools, and reporting structures.

  - Roll out employee training programs to educate staff on the policy and how to use AI tools responsibly.

- Change Management Focus: Treat the policy rollout as a key change initiative. Use a change manager (if resources allow) to oversee employee engagement, resolve resistance, and align the training with company culture.

Appoint Accountability and Monitoring Roles

Establish accountability by designating a team or individual responsible for overseeing AI governance and compliance.

- Actionable Tip: Ensure roles are clearly defined and include reporting mechanisms to address issues or escalate concerns.

- Change Management Focus: Make sure the governance team is seen as a resource, not an enforcer, to build trust and foster collaboration.

Monitor, Evaluate, and Update the Policy

AI tools evolve rapidly, and so will your organization’s needs. Schedule regular reviews to ensure the policy stays relevant.

- Actionable Tip: Use employee feedback and emerging best practices to refine the policy over time.

- Change Management Focus: Continue engaging employees throughout this process to maintain transparency and ensure the policy evolves in line with their needs.

The Role of Change Management

Integrating change management principles into your policy development ensures:

- Employee Engagement: Employees feel heard and involved, reducing resistance to the policy.

- Awareness and Adoption: Clear communication about the purpose and benefits of the policy fosters understanding and alignment.

- Seamless Implementation: A structured approach to managing change minimizes disruption and ensures the organization effectively adopts the policy.

Feel free to reach out to the team at Growth Strategies 101 for a confidential and obligation-free discussion on how you can implement a company AI policy for growth and sustainability.